Embodied Memories

motion capturing | artificial intelligence | human-computer interaction | performance

Motion capturing recommendation system for memory

roles: creative director | developer | prompt engineer | stage design manager

Motivation

How often do you wish you had a personal assistant guiding you through each moment of your day, enhancing your memory, and effortlessly streamlining your routine? Introducing Embodied Memories: a pioneering intervention that intertwines real-time motion capture with a large language model to strengthen your episodic and semantic memory in everyday life.

Picture this: a motion-capture suit equipped with 17 cutting-edge sensors that track your every move and your orientation in 3D space. As you begin your day, turning off your alarm triggers a personalized response from an AI. You're greeted with a cheerful “good morning”, a brief weather update, insights into your daily schedule, a message from a loved one, and even tips on navigating your commute to beat traffic. Your morning just got a little bit easier.

This intervention integrates into your daily life to subtly enhance memory retention while providing real-time information exactly when you need it. Because each reminder is tied to what your body is doing, it becomes an “embodied memory”.

As an “accountability partner”, it could become a reliable companion that supports your daily routines and memory-building habits. Are you ready to embrace a future where memory support is woven into your daily life?

Performance Demo

Methodology

Through a combination of real-time motion analysis and large language models (LLMs), the full-body Shadow motion-capture (MoCap) suit and a VIVE Tracker observe and interpret a set of pre-trained poses, each of which can fire a sound trigger that aids episodic and semantic memory. A voice assistant then gives suggestions based on the detected pose and can follow a designated checklist, such as a “morning routine”. Its recommendations are sourced from pre-selected GPT-3.5 prompts.

Each sound trigger is bounded by a zone of activation, so a pose only counts when the person is in the matching location. Using the position-tracking system, we created 3D bounding boxes that stand in for different rooms of a house, and each pose fires only when its detection threshold is met inside its assigned box. For instance, the “making coffee” pose cannot be triggered while the person is in the bathroom, even if they perform it correctly. The real-time system was built with a Python-based, GPU-accelerated, node-based GUI.
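The zone-of-activation logic described above can be sketched in a few lines. This is a minimal, hypothetical illustration, not the project's actual code: it assumes each pose is summarized as a small feature vector (for example, joint angles), matched by Euclidean distance against a reference template, and that each room is an axis-aligned box in tracker coordinates. The names `Zone`, `PoseTrigger`, `is_triggered`, and `Routine`, and all numbers and messages, are invented for the example.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Zone:
    """Hypothetical axis-aligned 3D box standing in for one room (units assumed: meters)."""
    name: str
    lo: Vec3  # minimum corner (x, y, z)
    hi: Vec3  # maximum corner (x, y, z)

    def contains(self, p: Vec3) -> bool:
        # Inside only if every coordinate lies between the two corners.
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


@dataclass(frozen=True)
class PoseTrigger:
    """Hypothetical checklist step: a pose that may only fire inside one zone."""
    name: str                    # e.g. "making coffee"
    template: Tuple[float, ...]  # assumed reference pose features (e.g. joint angles)
    zone: str                    # key of the Zone this pose is bound to
    threshold: float             # max Euclidean distance that still counts as a match
    message: str                 # pre-selected text for the voice assistant


def is_triggered(features: Sequence[float], position: Vec3,
                 zones: Dict[str, Zone], trigger: PoseTrigger) -> bool:
    """Fire only if the tracker is inside the bound zone AND the pose is close enough."""
    if not zones[trigger.zone].contains(position):
        return False  # right pose, wrong room: suppressed
    return math.dist(features, trigger.template) <= trigger.threshold


class Routine:
    """Walks through an ordered checklist, such as a "morning routine"."""

    def __init__(self, steps: List[PoseTrigger]) -> None:
        self.steps = steps
        self.index = 0  # next step we are waiting for

    def update(self, features: Sequence[float], position: Vec3,
               zones: Dict[str, Zone]) -> Optional[str]:
        """Call with each new pose reading; returns a message when the current step fires."""
        if self.index >= len(self.steps):
            return None  # routine finished
        step = self.steps[self.index]
        if is_triggered(features, position, zones, step):
            self.index += 1
            return step.message
        return None


# Usage with made-up room boxes and a single-step routine.
zones = {
    "kitchen": Zone("kitchen", (0.0, 0.0, 0.0), (3.0, 2.5, 4.0)),
    "bathroom": Zone("bathroom", (3.5, 0.0, 0.0), (5.0, 2.5, 2.0)),
}
coffee = PoseTrigger("making coffee", (90.0, 45.0, 10.0), "kitchen", 15.0,
                     "Your first meeting is at 10 a.m.")
routine = Routine([coffee])
first = routine.update((88.0, 47.0, 12.0), (4.0, 1.0, 1.0), zones)   # None: in the bathroom
second = routine.update((88.0, 47.0, 12.0), (1.0, 1.0, 1.0), zones)  # the coffee message
```

Gating on location before checking the pose keeps the rule simple: a pose outside its room is ignored no matter how well it matches, which is exactly the “making coffee in the bathroom” case. In a real system, the message would be passed to the voice assistant rather than returned as a string.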

megan & I feeling proud after successfully putting on the full MoCap Suit in under 4 minutes!

me trying martial arts moves while wearing the motion capturing suit

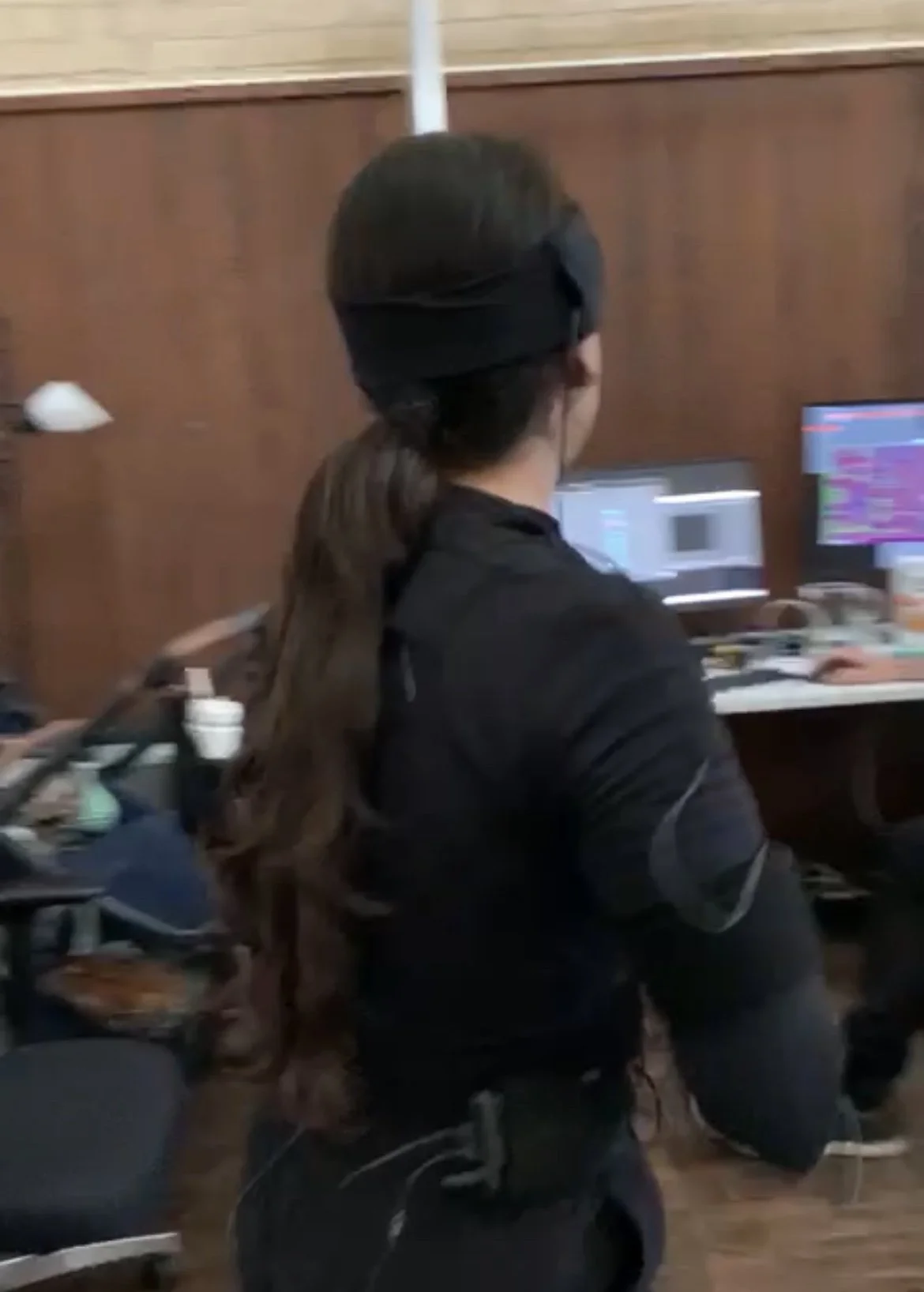

recording megan as she practices different movements to calibrate the pose detection system’s threshold for activation

streaming the real-time motion capturing pose detection software
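The captions above mention recording practice movements to calibrate the activation threshold. The project does not say how those recordings were turned into a number. One simple, hypothetical approach is sketched below: average the genuine attempts at a pose into a template, then place the threshold between the farthest genuine attempt and the closest non-target movement. The function names, the `margin` parameter, and the sample values are all assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple

Features = Sequence[float]


def mean_template(samples: List[Features]) -> Tuple[float, ...]:
    """Average the recorded attempts at one pose, feature by feature."""
    return tuple(sum(col) / len(col) for col in zip(*samples))


def calibrate_threshold(template: Features, in_pose: List[Features],
                        other: List[Features], margin: float = 0.5) -> float:
    """Place the threshold between the worst genuine attempt and the closest other movement.

    A margin near 0.0 hugs the genuine attempts (fewer false triggers, more misses);
    a margin near 1.0 moves toward the closest non-target movement (the opposite trade-off).
    """
    d_in = max(math.dist(s, template) for s in in_pose)  # farthest genuine attempt
    d_out = min(math.dist(s, template) for s in other)   # closest non-target movement
    if d_in >= d_out:
        raise ValueError("samples overlap: record more distinct movements or features")
    return d_in + margin * (d_out - d_in)


# Hypothetical recordings: three joint-angle features per sample.
coffee_attempts = [(88, 45, 10), (92, 45, 10), (90, 43, 10), (90, 47, 10)]
other_moves = [(120, 45, 10), (90, 80, 10)]  # e.g. stretching, reaching up

template = mean_template(coffee_attempts)                                # (90.0, 45.0, 10.0)
threshold = calibrate_threshold(template, coffee_attempts, other_moves)  # 16.0
```

Raising an error when the two sets overlap makes a bad calibration visible instead of silently producing a threshold that fires on the wrong movements.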

Future Directions

This novel approach to memory enhancement not only assists in real time but may also offer lasting cognitive benefits, especially for people with age-related dementia symptoms or conditions such as Alzheimer’s disease. By reinforcing memory triggers through physical movements, the system is intended to strengthen both episodic and semantic memory over time with minimal caregiver guidance. It ties daily experiences to their social context and to knowledge gathered over many interactions, making each moment more meaningful while lowering the effort needed to recall it.

Whether you are stretching, preparing breakfast, or exercising, tailored messages are relayed dynamically to reinforce recall in situ. Imagine effortlessly recalling your grandma’s recipe while making dinner, prompted by a specific cooking pose, or remembering your coach’s injury-prevention tips as you strike a particular posture during a workout. The current corpus of embodied memories invites the public to imagine this impending reality.

Current corpus of embodied memories: